Data is the lifeblood of modern businesses. As enterprises grow, so do their data needs. Managing massive volumes of data across multiple platforms can be challenging, often leading to bottlenecks in analytics and reporting. To harness data's full potential, organizations need efficient tools to extract, transform, and load (ETL) it into their data warehouses.

With countless ETL tools available, choosing the right one can be overwhelming. Here, we highlight the top 12 ETL tools that have proven their mettle in the industry.

What is ETL in an Enterprise Context?

ETL stands for Extract, Transform, Load: the process of gathering data from various sources, cleaning and organizing it, and loading it into a system for analysis. For large organizations, data often comes from diverse sources, including CRM systems, e-commerce platforms, ERPs, and IoT devices. ETL is the backbone of data-driven decision-making, helping companies move from raw data to actionable insights.

In an enterprise context, ETL processes support complex requirements, such as data compliance, real-time analytics, and integrating historical data with live data streams. This structured data management is crucial for effective analytics and reporting.

How ETL Works

Extract: Data is gathered from various sources (e.g., customer data from a CRM like Salesforce, transaction records from an ERP).

Transform: The data is cleaned, organized, and formatted to fit the analytical requirements (e.g., removing duplicates, standardizing dates).

Load: Transformed data is loaded into a target system (like a data warehouse) where teams can analyze it.
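To make the three stages concrete, here is a minimal sketch in Python using only the standard library. It assumes a hypothetical CSV export (the column names and sample rows are invented for illustration) and uses an in-memory SQLite database as a stand-in for a data warehouse; production tools add scheduling, monitoring, and error handling on top of this basic shape.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw export from a source system (e.g. a CRM), containing a
# duplicate row and dates in two different formats.
RAW_CSV = """customer_id,name,signup_date
1,Ada,2024-01-05
2,Grace,05/02/2024
1,Ada,2024-01-05
"""


def extract(raw: str) -> list[dict]:
    """Extract: read rows from the source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw)))


def standardize_date(value: str) -> str:
    """Normalize a date to ISO 8601, trying each known input format in turn."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):  # assumption: slashed dates are day/month/year
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop duplicate customers and standardize dates."""
    seen, cleaned = set(), []
    for row in rows:
        key = int(row["customer_id"])
        if key in seen:
            continue  # skip duplicates
        seen.add(key)
        cleaned.append((key, row["name"].strip(), standardize_date(row["signup_date"])))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

Running it prints `[(1, 'Ada', '2024-01-05'), (2, 'Grace', '2024-02-05')]`: the duplicate row is dropped and the `05/02/2024` date is normalized to ISO format.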

Walmart, a retail giant, relies heavily on ETL to manage data from millions of daily transactions. By extracting data from POS systems across its stores worldwide, transforming it to identify trends and patterns, and loading it into its data warehouse, Walmart gains real-time insights into customer behavior. This information helps the company optimize inventory and improve the customer experience.

What are ETL tools and how do they help data teams?

ETL tools automate the extract, transform, and load stages, which makes handling complex data pipelines much easier. Data teams use these tools to streamline workflows, ensuring that data flows from multiple sources into a central data storage system without manual intervention. By automating repetitive tasks, ETL tools free up data teams to focus on more complex data analysis, model-building, and actionable insights.

Coca-Cola uses ETL tools to gather data from various channels, including customer loyalty apps, retail partnerships, and social media campaigns. By integrating these data streams, Coca-Cola can track brand sentiment, monitor customer interactions, and gain insights into product performance across regions. This unified view empowers the marketing team to make faster, data-driven decisions.

| Benefit | Impact on Data Teams |
| --- | --- |
| Automation | Reduces time spent on manual data handling |
| Data Quality | Provides consistency across datasets |
| Efficiency | Streamlines data access, enabling faster insights |

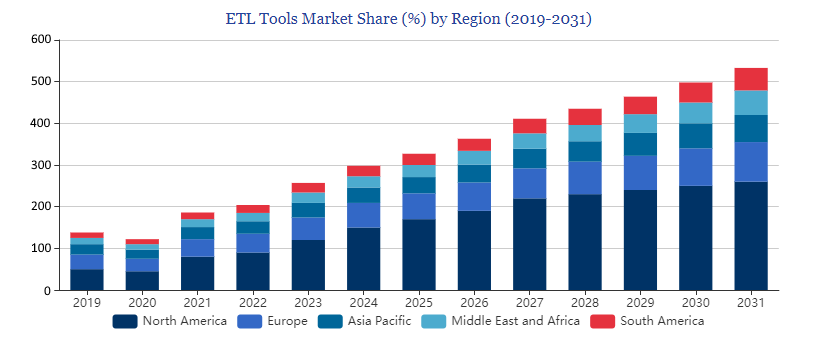

The global ETL tools market is projected to expand significantly between 2019 and 2031.

North America is projected to dominate the market throughout the forecast period, with a share of around 30% in 2019 that is expected to grow to approximately 35% by 2031. By 2031, Europe's share is projected to reach nearly 30% and Asia Pacific's around 25%. The Middle East, Africa, and South America are projected to hold smaller shares, with South America growing slightly faster than the Middle East and Africa.

The ETL tools market is experiencing steady growth in 2024, and strategic initiatives from key players are poised to further accelerate market expansion over the forecast period.

Types of ETL tools

ETL tools vary widely based on data volume, integration needs, real-time processing, and storage environments. Here are the primary types of ETL tools used in enterprises.

1. Batch Processing ETL Tools

Process data in bulk at scheduled intervals. Informatica is popular for batch processing, handling large data loads for enterprises like Wells Fargo, where data processing happens at set times daily for regulatory compliance.

2. Real-Time ETL Tools

Integrate and transform data continuously, providing up-to-date insights. Hevo is used by companies like Freshly (a meal delivery service) to monitor changes in real time, allowing them to adapt their supply chain to fluctuating demand.
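One common way to keep a target close to up to date without re-reading the whole source is incremental extraction with a "high-water mark": remember the latest change already processed and fetch only newer rows on each poll. The sketch below illustrates that idea with SQLite; the `orders` table and `updated_at` column are hypothetical, and it is not a description of how Hevo or any other specific tool works internally (many real-time tools read database change logs instead of polling).

```python
import sqlite3


def extract_incremental(conn: sqlite3.Connection, watermark: str) -> tuple[list[tuple], str]:
    """Return rows changed since `watermark`, plus the new watermark.

    Assumes a hypothetical `orders` table whose `updated_at` column holds
    ISO 8601 timestamps, which sort correctly as strings.
    """
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark  # keep the old mark if nothing changed
    return rows, new_watermark


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, status TEXT, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "placed", "2024-06-01T10:00:00"), (2, "placed", "2024-06-01T10:05:00")],
)

# The first poll picks up everything after the initial watermark.
batch1, wm = extract_incremental(source, "1970-01-01T00:00:00")

# A new change arrives; the next poll returns only that row.
source.execute(
    "UPDATE orders SET status = 'shipped', updated_at = '2024-06-01T10:30:00' WHERE id = 1"
)
batch2, wm = extract_incremental(source, wm)
print(len(batch1), batch2, wm)
```

A real pipeline would run this in a loop or on a short schedule and persist the watermark between runs. The strict `>` comparison can skip rows that share the exact watermark timestamp, which is one reason log-based change data capture is often preferred.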

3. Cloud-Based ETL Tools

Optimized for cloud data storage and analysis, integrating with platforms like AWS, Google Cloud, and Azure. Matillion works well with cloud environments like Amazon Redshift, helping companies like Siemens manage their cloud data warehouse.

4. Open-Source ETL Tools

Free, customizable tools for developers who need flexibility in pipeline management. Apache Airflow is used by tech companies like Slack to build custom workflows tailored to their unique data engineering requirements.

Comparing Types of ETL Tools

| ETL Tool Type | Pros | Cons | Tool Example |
| --- | --- | --- | --- |
| Batch Processing | Efficient for large datasets | Not ideal for real-time needs | Informatica |
| Real-Time | Up-to-date data | Higher infrastructure costs | Hevo |
| Cloud-Based | Scalable, flexible for cloud storage | Limited on-premises support | Matillion |
| Open-Source | Customizable and cost-effective | Requires development expertise | Apache Airflow |

Choosing the Right ETL Tool

Selecting an ETL tool depends on the specific needs of a business. Considerations include scalability, cost, data integration needs, compatibility with existing infrastructure, and the level of technical skill within the data team. Here’s a breakdown of factors to consider.

1. Data Volume and Scalability

- High Volume: For enterprises handling large data loads, tools like Snowflake offer seamless scalability.

- Variable Volume: Small to mid-sized businesses with variable data loads may find Fivetran cost-effective and easy to scale.

2. Integration Needs

- Cloud Environments: Companies moving data to cloud warehouses will benefit from tools like Matillion or Azure Data Factory.

- On-Premises Compatibility: Organizations with in-house data centers may need hybrid tools like Talend.

3. Budget and Pricing

- Tools like DBT offer an open-source option for budget-conscious teams, while Informatica provides robust features at a premium price, catering to enterprises needing advanced data governance.

4. Technical Expertise

- Non-Technical Teams: For teams with limited coding experience, tools with a visual interface like Hevo are ideal.

- Technical Teams: For advanced users, Apache Airflow provides extensive customization and flexibility but requires Python expertise.

Decision-Making Framework

| Factor | Best Tool Choice | Detail |
| --- | --- | --- |
| High Data Volume | Snowflake | Optimized for large datasets |
| Cloud Storage | Matillion, Azure Data Factory | Integrates seamlessly with cloud platforms |
| Low Budget | DBT | Open-source, cost-effective |
| Non-Technical | Hevo | No-code interface for ease of use |

Top 12 ETL Tools for Enterprises

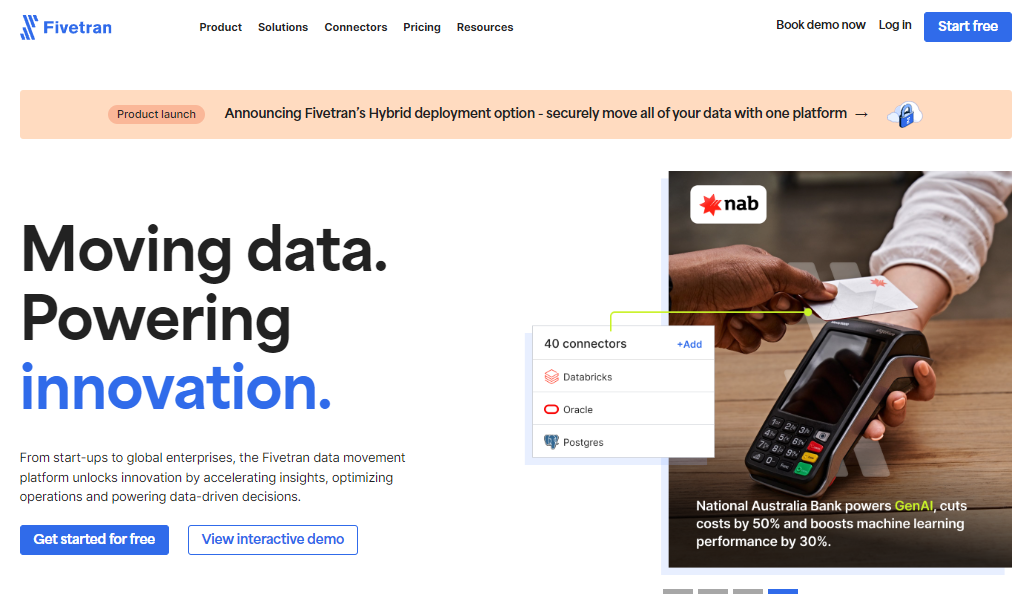

1. Fivetran

Fivetran is a cloud-based ETL tool known for its simplicity and automation in data integration. It was designed with the philosophy of "set it and forget it," focusing on making the data pipeline as hands-off as possible.

Fivetran connects applications, databases, and event sources directly to your data warehouse, syncing data continuously so that it is available in near real time. It’s particularly popular with companies that prioritize speed, minimal setup, and automated data sync.

How Fivetran Works

Fivetran follows an "extract-load-transform" (ELT) model. It extracts data from sources, loads it directly into a warehouse, and then applies transformations within the warehouse environment rather than in the pipeline. This approach reduces latency and allows analysts to work with raw data without waiting for complex transformation processes.
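The difference between ELT and classic ETL is mostly about where the transformation runs. This sketch uses SQLite as a hypothetical stand-in for a cloud warehouse: raw rows are loaded untouched, and the transformation is a SQL view defined afterwards inside the "warehouse". Table and column names are invented, and this is not Fivetran's API.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: land the raw records as-is, with no cleanup in the pipeline.
warehouse.execute("CREATE TABLE raw_orders (order_id INTEGER, amount_cents INTEGER, country TEXT)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 1250, "us"), (2, 990, "US"), (3, 4000, "de")],
)

# Transform: defined later, inside the warehouse, as SQL over the raw table.
warehouse.execute(
    """
    CREATE VIEW revenue_by_country AS
    SELECT UPPER(country) AS country, SUM(amount_cents) / 100.0 AS revenue
    FROM raw_orders
    GROUP BY UPPER(country)
    """
)
result = warehouse.execute("SELECT * FROM revenue_by_country ORDER BY country").fetchall()
print(result)  # [('DE', 40.0), ('US', 22.4)]
```

Because `raw_orders` is kept as-is, analysts can still query the raw data or redefine the view later without re-extracting anything.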

Key Features

- Automated Schema Migrations: Fivetran automatically adjusts data schemas in the warehouse if the source schema changes, reducing the need for manual intervention.

- Real-Time Data Sync: Fivetran ensures real-time or near real-time data availability in the warehouse, suitable for applications requiring up-to-date analytics.

- Wide Range of Connectors: It offers over 150 pre-built connectors for common data sources like Salesforce, Google Analytics, and Shopify.

- High Data Accuracy: The tool emphasizes data quality and employs rigorous error-checking to ensure data reliability.
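Fivetran's own schema-migration logic isn't described in this article, so the following is only a generic sketch of the simplest case of automated schema handling: a new column appears in the source and is added to the destination table. It uses SQLite's `PRAGMA table_info` and `ALTER TABLE ... ADD COLUMN`; the `sync_schema` helper, table, and column names are hypothetical.

```python
import sqlite3


def sync_schema(conn: sqlite3.Connection, table: str, source_columns: dict[str, str]) -> list[str]:
    """Add any source columns that are missing from the destination table.

    `source_columns` maps column name -> SQL type as reported by the
    (hypothetical) source. Returns the names of the columns that were added.
    """
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    added = []
    for name, sql_type in source_columns.items():
        if name not in existing:
            # Identifiers come from trusted metadata here; real code should validate them.
            conn.execute(f"ALTER TABLE {table} ADD COLUMN {name} {sql_type}")
            added.append(name)
    return added


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (id INTEGER, email TEXT)")

# The source later starts sending a new `phone` field.
added = sync_schema(conn, "contacts", {"id": "INTEGER", "email": "TEXT", "phone": "TEXT"})
print(added)  # ['phone']
```

Renamed columns, type changes, and dropped columns need more careful policies, which is where much of the value of a managed tool lies.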

DocuSign, the popular electronic signature company, uses Fivetran to automate the movement of customer data from multiple systems into its Snowflake data warehouse. Previously, DocuSign's data team spent significant time manually updating and managing the data pipeline to ensure consistency across platforms. With Fivetran, DocuSign now has a more reliable, near-real-time data flow, enabling analysts to work with the most current data for customer insights and product development.

By automating schema updates and error correction, Fivetran has allowed DocuSign’s data engineers to focus on analysis rather than pipeline maintenance, which has accelerated their analytics and reporting process.

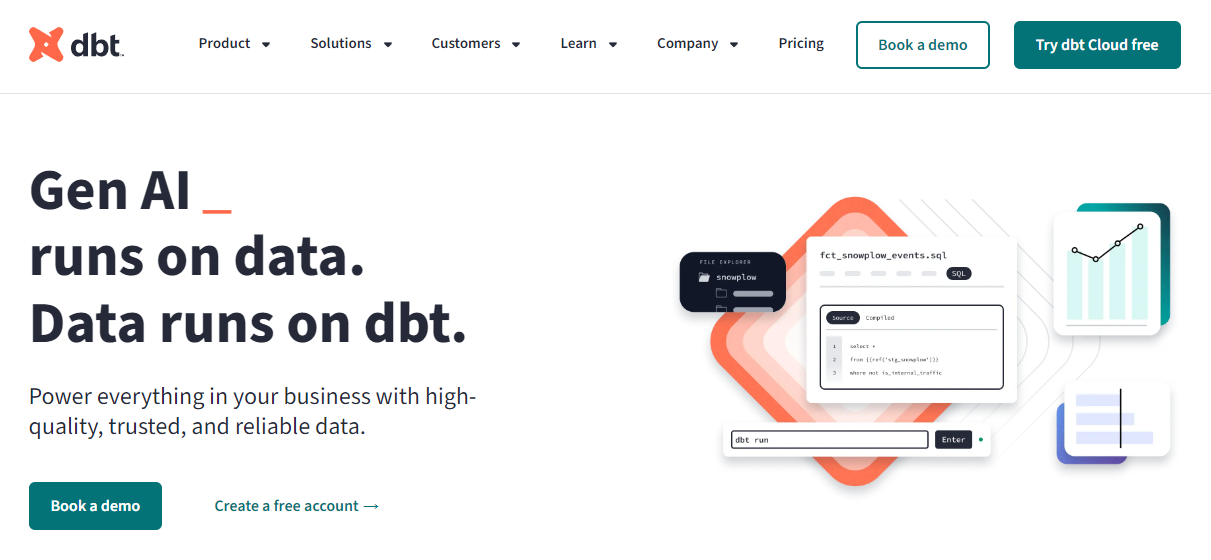

2. DBT (Data Build Tool)

DBT (Data Build Tool) is a transformation-focused tool designed for analytics engineers: it handles the “T” in ELT rather than extraction or loading.

It’s an open-source platform primarily used to transform data that already resides in cloud data warehouses, making it ideal for organizations relying on SQL for data transformations. DBT encourages an in-warehouse approach, allowing users to build data models directly in their warehouse.

How DBT Works

DBT operates on top of a cloud data warehouse like Snowflake, BigQuery, or Redshift. It does not extract data itself: it runs SQL-based transformations on raw data that another tool has already loaded into the warehouse and stores the results there as tables or views. This approach enables a simplified workflow for SQL-skilled teams, reducing the need for complex ETL pipelines.

Key Features

- SQL-Based Transformations: DBT allows analysts to define transformations with SQL, creating tables and views without leaving the warehouse.

- Version Control Integration: Connects with Git, enabling collaborative and version-controlled transformations.

- Automated Testing: Built-in data testing ensures the quality of data transformations.

- Documentation Generation: Automatically generates documentation for all models, making data pipelines transparent.
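In DBT itself, each model is a SQL `SELECT` statement in its own file, dependencies are declared with the `ref()` function, and generic tests such as `unique` and `not_null` are configured in YAML. The Python sketch below is a hypothetical miniature of those ideas, not DBT's implementation: it resolves `ref` placeholders, builds models as SQLite views in dependency order, and runs a simple uniqueness and not-null check. The model names and data are invented.

```python
import re
import sqlite3
from graphlib import TopologicalSorter

# Hypothetical models loosely modeled on DBT: each is a SELECT statement, and
# {{ ref('name') }} marks a dependency on another model.
MODELS = {
    "dim_customers": "SELECT id, email FROM {{ ref('stg_customers') }} WHERE email IS NOT NULL",
    "stg_customers": "SELECT id, LOWER(email) AS email FROM raw_customers",
}
REF = re.compile(r"\{\{\s*ref\('(\w+)'\)\s*\}\}")


def build(conn: sqlite3.Connection) -> list[str]:
    """Materialize every model as a view, dependencies first."""
    graph = {name: set(REF.findall(sql)) for name, sql in MODELS.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        sql = REF.sub(lambda m: m.group(1), MODELS[name])  # resolve refs to view names
        conn.execute(f"CREATE VIEW {name} AS {sql}")
    return order


def check_unique_not_null(conn: sqlite3.Connection, model: str, column: str) -> bool:
    """Simple data test: the column contains no NULLs and no duplicate values."""
    nulls = conn.execute(f"SELECT COUNT(*) FROM {model} WHERE {column} IS NULL").fetchone()[0]
    dupes = conn.execute(
        f"SELECT COUNT(*) FROM (SELECT {column} FROM {model} GROUP BY {column} HAVING COUNT(*) > 1)"
    ).fetchone()[0]
    return nulls == 0 and dupes == 0


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT)")  # already loaded raw data
conn.executemany("INSERT INTO raw_customers VALUES (?, ?)", [(1, "A@x.com"), (2, None)])

order = build(conn)
ok = check_unique_not_null(conn, "dim_customers", "id")
print(order, ok)
```

Here `order` is `['stg_customers', 'dim_customers']`: the staging model is built before the model that references it, even though it is listed second.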

JetBlue uses DBT to streamline data operations, including customer service and flight analytics. Previously, JetBlue’s data team manually managed transformations, resulting in inconsistent insights across departments. DBT now allows JetBlue’s analysts to create standardized data models, enabling a unified view of customer data. With DBT, they’ve significantly reduced errors and improved data reliability, resulting in a better understanding of customer needs and more efficient operations.

3. Informatica

Informatica is a comprehensive data integration tool known for its enterprise-grade capabilities. Often used by large corporations, Informatica offers a broad suite of features for data extraction, transformation, and integration across complex environments.

With its scalability, Informatica is popular in industries with rigorous data compliance requirements, such as healthcare and finance.

How Informatica Works

Informatica is built to support hybrid environments. It extracts data from on-premises or cloud sources, transforms it according to pre-set business rules, and loads it into target destinations. Informatica’s robust interface allows administrators to monitor the entire data lifecycle and ensure compliance.
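Informatica defines business rules through its own design tools, so the following is only a generic Python illustration of rule-driven transformation with an audit trail. The `Rule` class, the two rules, and the payment record are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A named business rule: a condition plus the change applied when it holds."""
    name: str
    applies: Callable[[dict], bool]
    action: Callable[[dict], dict]


# Hypothetical rules for a payments feed.
RULES = [
    Rule("uppercase_currency", lambda r: "currency" in r,
         lambda r: {**r, "currency": r["currency"].upper()}),
    Rule("flag_large", lambda r: r["amount"] >= 10_000,
         lambda r: {**r, "review": True}),
]


def apply_rules(record: dict, rules: list[Rule]) -> tuple[dict, list[str]]:
    """Apply each matching rule in order; return the result and an audit trail."""
    fired = []
    for rule in rules:
        if rule.applies(record):
            record = rule.action(record)  # actions return a new dict, not a mutated one
            fired.append(rule.name)
    return record, fired


out, audit = apply_rules({"currency": "usd", "amount": 12_500}, RULES)
print(out, audit)
```

The returned `audit` list (`['uppercase_currency', 'flag_large']`) records which rules fired, which is the kind of lineage information compliance teams tend to ask for.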

Key Features

- AI-Powered Data Insights: Uses AI to optimize data management and detect anomalies.

- Data Quality Tools: Advanced data cleaning, profiling, and governance features.

- Scalability: Handles high data volumes, making it suitable for enterprise-level needs.

- Hybrid and Multi-Cloud Support: Integrates with both on-premises and cloud platforms.

Kaiser Permanente, a major healthcare provider, uses Informatica to integrate patient data from multiple hospital systems. With strict data governance and privacy regulations, Kaiser requires high accuracy and compliance. Informatica enables seamless data integration, allowing them to provide consistent patient insights across their facilities.

4. Apache Airflow

Apache Airflow, an open-source workflow orchestrator, simplifies the management and scheduling of complex data processing tasks.

It is widely used in tech environments for creating custom ETL workflows that require high levels of customization and flexibility.

How Airflow Works

Airflow is code-based, using Python scripts to define tasks and workflows. It allows developers to set dependencies, schedules, and error-handling mechanisms for tasks. Airflow is popular for orchestrating workflows rather than for data extraction and transformation alone.
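In Airflow, tasks are operators attached to a `DAG` object, dependencies are declared between tasks, and the scheduler handles retries (configured through operator arguments such as `retries`). The sketch below is not Airflow code: it imitates dependency ordering and retries in plain Python so the behavior is easy to see. The `run_workflow` helper and all task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_workflow(tasks: dict[str, Callable[[], None]],
                 upstream: dict[str, set[str]],
                 retries: int = 2) -> list[str]:
    """Run tasks in dependency order, retrying each failed task up to `retries` times."""
    log = []
    for name in TopologicalSorter(upstream).static_order():
        for attempt in range(1, retries + 2):
            try:
                tasks[name]()
            except Exception as exc:
                log.append(f"{name}: attempt {attempt} failed ({exc})")
            else:
                log.append(f"{name}: attempt {attempt} succeeded")
                break
        else:
            # Every attempt failed: stop so downstream tasks never run on bad data.
            raise RuntimeError(f"task {name!r} failed after {retries + 1} attempts")
    return log


calls = {"extract": 0}


def extract() -> None:
    """Simulated extraction that fails once with a transient error."""
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source timed out")


def transform() -> None:
    """No-op placeholder for a transformation step."""


def load() -> None:
    """No-op placeholder for a load step."""


log = run_workflow(
    {"extract": extract, "transform": transform, "load": load},
    {"extract": set(), "transform": {"extract"}, "load": {"transform"}},
)
print("\n".join(log))
```

The log shows the first extraction attempt failing, the retry succeeding, and only then the downstream tasks running.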

Key Features

- Dynamic Task Scheduling: Provides detailed scheduling capabilities for complex workflows.

- Open-Source and Customizable: Adaptable to specific business needs.

- Scalable Architecture: Designed to handle large-scale data processing.

- Integrates with Other ETL Tools: Works alongside tools like Fivetran and DBT.

Lyft uses Apache Airflow to manage its ETL workflows, including data collection from user interactions and ride details. By automating data pipelines, Lyft can ensure timely data updates for their analytics teams, who rely on accurate data to monitor ride demand and optimize driver routes.

5. Matillion

Matillion is a cloud-native ETL solution specifically built for modern cloud data warehouses like Amazon Redshift, Google BigQuery, and Snowflake.

It provides a visual, drag-and-drop interface for building data pipelines, making it accessible even to those with minimal coding knowledge. Matillion is particularly well-suited for organizations looking to migrate data to the cloud and streamline data transformation within cloud platforms.

How Matillion Works

Matillion pushes transformation steps down into the cloud data warehouse rather than performing them in transit, an ELT-style approach. This setup maximizes efficiency for cloud environments, allowing data analysts to process and transform data close to the storage layer, reducing latency and boosting performance.

Key Features

- Native Cloud Integration: Directly connects with AWS, Google Cloud, and Azure, offering seamless cloud compatibility.

- User-Friendly Interface: A drag-and-drop interface streamlines the construction of complex data transformation workflows.

- High-Speed Data Loading: Optimized for rapid data ingestion into cloud warehouses.

- Pre-Built Connectors: Offers a library of connectors for popular SaaS applications, including Salesforce, Slack, and Jira.

Fox Corporation uses Matillion to manage data integration for its cloud-based analytics. Previously, Fox's data teams manually handled data transformations from various media sources, slowing down reporting processes. With Matillion, they now automate data ingestion from streaming platforms and social media channels, which enables quicker insights into audience preferences and more targeted content recommendations.

6. Talend

Talend is an ETL and data integration tool designed to handle both batch and real-time data. Its free, open-source edition, Talend Open Studio, was discontinued by Qlik in early 2024; its commercial editions include advanced features for large organizations.

Talend’s flexibility and extensive suite of tools make it popular for companies needing robust data integration, especially those with complex data environments that require high compliance and data governance.

How Talend Works

Talend enables both batch and real-time data processing through a graphical interface that allows users to define transformations and workflows. For data quality, Talend’s toolkit includes validation and standardization functions, which ensure high-quality data flows into the data warehouse.
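Talend provides its own validation and standardization components; the sketch below shows the same general pattern in plain Python: standardize each row, validate it, and route failures to a reject list with a reason instead of silently dropping them. The email pattern and country lookup table are simplified, hypothetical examples.

```python
import re

EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[a-z]{2,}$")  # deliberately simple check
COUNTRY_ALIASES = {"usa": "US", "united states": "US", "deutschland": "DE"}  # hypothetical lookup


def standardize(row: dict) -> dict:
    """Trim whitespace, lowercase emails, and map country aliases to ISO codes."""
    country = row["country"].strip()
    return {
        "email": row["email"].strip().lower(),
        "country": COUNTRY_ALIASES.get(country.lower(), country.upper()),
    }


def validate(rows: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split rows into those fit to load and rejects paired with a reason."""
    valid, rejected = [], []
    for row in rows:
        clean = standardize(row)
        if not EMAIL.match(clean["email"]):
            rejected.append((row, "invalid email"))
        elif len(clean["country"]) != 2:
            rejected.append((row, "unknown country"))
        else:
            valid.append(clean)
    return valid, rejected


valid, rejected = validate([
    {"email": " Ana@Example.com ", "country": "usa"},
    {"email": "not-an-email", "country": "DE"},
    {"email": "li@example.org", "country": "Atlantis"},
])
print(valid)
print([reason for _, reason in rejected])
```

Keeping rejects with a reason makes it possible to fix and reprocess them, rather than discovering missing rows in a report later.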

Key Features

- Data Quality Tools: Includes data profiling, validation, and cleansing features.

- Hybrid Compatibility: Works with cloud, on-premises, and hybrid environments.

- Real-Time Data Processing: Supports streaming data and real-time analytics.

- Pre-Built Connectors: Integrates with over 900 systems, including databases and CRM applications.

AstraZeneca uses Talend to integrate clinical trial data from multiple sources, a critical need in the healthcare sector where regulatory compliance is strict. By consolidating data from different regions and trial phases, Talend helps AstraZeneca’s teams quickly analyze trial results, ensuring compliance with industry regulations and speeding up the development process.

7. Hevo

Hevo is a no-code ETL tool designed for simplicity and automation, targeting small and mid-sized businesses looking to set up ETL pipelines quickly. Its automated pipeline builder makes it easy to move data from SaaS applications and databases to cloud warehouses with minimal manual effort.

Hevo focuses on real-time data integration, which suits companies that need up-to-date data for business intelligence.

How Hevo Works

Hevo uses a no-code, drag-and-drop interface where users can select data sources, define transformations, and map destinations without needing programming knowledge. It also provides pre-built connectors, enabling quick integration with common SaaS applications.

Key Features

- No-Code Interface: Simplifies pipeline setup, ideal for teams without extensive technical expertise.

- Real-Time Data Sync: Provides near-instant data updates, useful for dynamic analytics.

- Automated Error Handling: Includes built-in monitoring and error notifications.

- Pre-Built Integrations: Offers connectors for popular sources like Stripe, Shopify, and Zendesk.

Zenefits, an HR technology platform, uses Hevo to sync data from multiple sources, such as billing and customer service platforms, into a single warehouse. This integration enables Zenefits to analyze customer behavior and service metrics in real time, helping their teams make data-driven decisions on customer retention and support improvements.

8. Portable.io

Portable.io is an API-based ETL tool designed for businesses with niche data sources that require custom integrations.

It focuses on providing a scalable ETL solution that allows companies to create unique pipelines tailored to industry-specific or lesser-known data sources, making it popular in government and specialized industries.

How Portable.io Works

Portable.io connects to niche data sources through custom API configurations, enabling data extraction from specialized or proprietary systems. Once the data is extracted, it can be loaded into traditional data warehouses or used for immediate analysis in business intelligence tools.
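Many niche APIs return results a page at a time, with a cursor pointing at the next page. A custom connector of the kind described above typically wraps that pagination so downstream steps see a single stream of records. The sketch assumes a hypothetical API shape (pages of `(records, next_cursor)`) and uses a fake in-memory source instead of real HTTP calls; it does not reflect Portable.io's internals.

```python
from typing import Callable, Iterator, Optional

# A page is (records, next_cursor); next_cursor is None on the last page.
Page = tuple[list[dict], Optional[str]]


def paginate(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield every record from a cursor-paginated API, one page at a time."""
    cursor = None
    while True:
        records, cursor = fetch_page(cursor)
        yield from records
        if cursor is None:
            break


# Hypothetical stand-in for a niche agency API; a real connector would make
# HTTP requests here, with authentication, rate limiting, and retries.
FAKE_PAGES = {
    None: ([{"id": 1}, {"id": 2}], "page2"),
    "page2": ([{"id": 3}], None),
}


def fake_fetch(cursor: Optional[str]) -> Page:
    """Return the page for `cursor` from the in-memory fake API."""
    return FAKE_PAGES[cursor]


records = list(paginate(fake_fetch))
print(records)  # [{'id': 1}, {'id': 2}, {'id': 3}]
```

Because `paginate` is a generator, records can be loaded as they arrive instead of holding the full result set in memory.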

Key Features

- Custom API Integrations: Build custom connectors for rare or niche data sources.

- Scalable Infrastructure: Designed to handle data from high-frequency API calls.

- Automated Monitoring: Includes automated tracking for error detection and response.

- User-Friendly Interface: Minimal setup required, with a focus on easy API management.

The U.S. Census Bureau uses Portable.io to extract, process, and analyze data from various state agencies. With Portable’s API-centric design, the Census Bureau can seamlessly integrate state-specific datasets into its national database, which supports population research and demographic analytics.

9. SAP Datasphere

SAP Datasphere (formerly SAP Data Warehouse Cloud) is an enterprise-grade ETL and data warehousing solution optimized for businesses heavily invested in the SAP ecosystem.

Designed to work seamlessly with SAP’s ERP systems, Datasphere supports the extraction, transformation, and storage of vast datasets. Its built-in data governance features make it popular in industries where data security and compliance are critical.

How SAP Datasphere Works

SAP Datasphere uses a layered architecture that combines data ingestion, transformation, and virtualization. It enables organizations to unify data from multiple SAP sources as well as third-party databases, providing an interconnected view of enterprise data. This flexibility allows businesses to perform real-time analytics without moving or replicating all data sources, reducing latency and storage needs.
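Data federation means querying data where it lives instead of copying it into one place first. SAP Datasphere implements this very differently, but SQLite's `ATTACH DATABASE` offers a small local analogy: two independent databases (hypothetical "ERP" and "CRM" stand-ins) are joined at query time without replicating either table.

```python
import sqlite3

# Two separate databases standing in for separate systems; both are hypothetical.
conn = sqlite3.connect(":memory:")                 # "main": an ERP stand-in
conn.execute("ATTACH DATABASE ':memory:' AS crm")  # a second, independent database

conn.execute("CREATE TABLE main.invoices (customer_id INTEGER, total REAL)")
conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO main.invoices VALUES (?, ?)", [(1, 100.0), (1, 50.0), (2, 75.0)])
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])

# A federated query: joins across both databases at query time, without first
# copying either table into the other.
rows = conn.execute(
    """
    SELECT c.name, SUM(i.total) AS revenue
    FROM main.invoices AS i
    JOIN crm.customers AS c ON c.id = i.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(rows)  # [('Acme', 150.0), ('Globex', 75.0)]
```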

Key Features

- Native Integration with SAP Products: Directly connects to SAP ERP, S/4HANA, and other SAP modules.

- Built-In Governance and Security: Provides data governance and security controls that help protect sensitive data against breaches.

- Data Federation and Virtualization: Accesses data without duplicating it, optimizing storage costs and performance.

- Scalable for Large Enterprises: Handles large data volumes, ideal for multinational organizations.

Siemens, a global manufacturing and engineering firm, uses SAP Datasphere to integrate data from various SAP systems across its subsidiaries. Siemens relies on Datasphere to consolidate financial and operational data, ensuring consistency in reporting and analytics. This centralized approach has helped Siemens improve decision-making across departments, from manufacturing processes to logistics management.

10. Azure Data Factory

Azure Data Factory (ADF) is Microsoft’s ETL and data integration tool that provides a cloud-based platform for creating and managing data pipelines.

Part of Microsoft Azure, ADF is optimized for hybrid data processing, allowing organizations to process both cloud and on-premises data. It is especially popular among businesses that rely on the Microsoft ecosystem.

How Azure Data Factory Works

ADF provides a centralized interface to create and monitor data pipelines. It allows users to create custom ETL pipelines via a graphical UI, making it easy to design data flows, orchestrate batch jobs, and schedule tasks. With built-in connectors, ADF can extract and transform data from a wide range of sources, with pipelines running on a schedule or triggered by events.

Key Features

- Hybrid Data Integration: Connects cloud and on-premises data for streamlined processing.

- Drag-and-Drop Pipeline Builder: Provides a user-friendly interface for building complex workflows.

- Data Movement and Orchestration: Facilitates ETL, ELT, and big data processing tasks.

- Integration with Azure Services: Works seamlessly with Azure Machine Learning, Azure Synapse, and Power BI, streamlining end-to-end data pipelines.

Adobe uses Azure Data Factory to integrate customer data across multiple platforms, such as Adobe Analytics and Microsoft Dynamics. ADF allows Adobe to build a centralized pipeline that synchronizes customer data, enabling real-time customer insights and personalization. By leveraging Azure’s scalability, Adobe has enhanced its customer experience and engagement strategies.

11. Databricks

Databricks is a data analytics and engineering platform that combines ETL, data processing, and machine learning in one cloud-based environment.

Built on Apache Spark, Databricks excels at handling big data and complex workflows, making it popular for companies focused on AI-driven analytics and data science.

How Databricks Works

Databricks leverages Spark’s distributed processing capabilities to transform large datasets. The platform provides a collaborative workspace where data engineers and scientists can share, analyze, and transform data in real time. With its integrated machine learning capabilities, Databricks supports the entire data pipeline from ingestion to advanced analytics.
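Spark's distributed processing splits data into partitions, processes each partition independently, and then merges the partial results. The sketch below shows that partition, map, and reduce shape in plain Python, with threads in a single process standing in for a cluster's executors; it is a conceptual illustration, not PySpark code, and the transaction data is hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(records: list, n: int) -> list[list]:
    """Split records into n roughly equal partitions (round-robin)."""
    return [records[i::n] for i in range(n)]


def aggregate_partition(part: list[tuple[str, int]]) -> Counter:
    """Map step: total the amounts per key within one partition."""
    local = Counter()
    for key, value in part:
        local[key] += value
    return local


# Hypothetical (merchant, amount) transactions.
transactions = [("shop_a", 10), ("shop_b", 5), ("shop_a", 7), ("shop_c", 3), ("shop_b", 1)]

with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(aggregate_partition, partition(transactions, 3)))

# Reduce step: Counter addition sums the per-partition totals key by key.
totals = reduce(lambda a, b: a + b, partials, Counter())
print(dict(totals))
```

In Databricks, the equivalent would be a DataFrame `groupBy(...).sum(...)`, with Spark handling partitioning and merging across the cluster.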

Key Features

- Unified Data Processing: Integrates ETL, analytics, and machine learning workflows.

- Scalable Spark-Based Processing: Built on Apache Spark, ideal for large-scale data.

- Collaborative Workspaces: Enables data engineering and data science collaboration.

- Machine Learning Support: Integrates with popular ML frameworks for advanced data processing.

HSBC, a global bank, uses Databricks to centralize and analyze customer transaction data for fraud detection. By applying machine learning models within Databricks, HSBC’s teams can detect suspicious patterns and respond to threats in real time. Databricks’ scalability is essential for handling the vast amounts of data generated by HSBC’s global transactions.

12. Snowflake

Snowflake is a cloud-native data warehousing solution that integrates ETL capabilities for seamless data storage and transformation. It’s highly scalable and allows organizations to store data in a central, cloud-based environment.

Known for its performance and flexibility, Snowflake is particularly popular with growing companies needing fast access to their data.

How Snowflake Works

Snowflake provides a fully managed data warehouse where users can store data and perform transformations in the same environment. It allows organizations to access data in real-time and perform analysis directly within the warehouse, reducing the need for external ETL steps.

Key Features

- Separation of Storage and Compute: Decouples storage and compute, allowing for flexible and independent scaling.

- Near-Zero Maintenance: Cloud-native, reducing the need for infrastructure management.

- Multi-Cloud Availability: Cloud-agnostic, running on AWS, Azure, and Google Cloud.

- Data Sharing: Allows seamless data sharing across Snowflake accounts.

DoorDash, a food delivery service, uses Snowflake to centralize data from its customer and delivery databases. This setup enables DoorDash to analyze order trends and optimize delivery routes in real time, improving both operational efficiency and customer satisfaction.


Future Trends in ETL Tools

The ETL landscape is evolving with new technologies that address the increasing demand for data insights and analytics. Emerging trends in ETL tools reflect growing interest in artificial intelligence, self-service functionality, and enhanced security. Let’s explore some of these trends.

1. AI-Powered ETL Tools

Automated Data Processing: AI capabilities allow ETL tools to optimize workflows, detect anomalies, and improve data transformations. Informatica uses AI-driven insights to automate complex data transformations, reducing the time data engineers spend on repetitive tasks.
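Vendors' AI features are proprietary, but a useful baseline for the anomaly detection they offer is a simple statistical check. The sketch below flags a pipeline run whose loaded row count is far from recent history using a z-score; it is a stand-in for the idea, not a machine learning model, and the counts and threshold are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], latest: int, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` sample standard deviations
    from the mean of recent history (a simple z-score test)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts loaded by a pipeline.
history = [10_120, 9_980, 10_050, 10_200, 9_900]
print(is_anomalous(history, 10_080))  # False
print(is_anomalous(history, 2_300))   # True
```

With this history (mean 10,050, sample standard deviation about 117), a run of 10,080 rows passes and a run of 2,300 rows is flagged.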

2. Self-Service ETL Tools

Empowering Non-Technical Users: More ETL platforms are introducing low-code and no-code options to make data integration accessible for non-engineers. Hevo’s no-code interface allows marketing teams to build their own data pipelines without technical assistance, helping companies become more agile in data handling.

3. Enhanced Security and Compliance Features

Compliance Integration: With regulations like GDPR and CCPA, ETL tools are integrating stronger data governance features to manage personal data. SAP Datasphere offers data governance tools that help organizations control access to sensitive data, supporting compliance for global companies.
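One common technique for handling personal data in a pipeline is pseudonymization: replacing identifiers with consistent tokens before the data is loaded. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library); the key, field names, and record are hypothetical, and this is not how SAP Datasphere or any other product implements governance.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    The same normalized input always maps to the same token, and without the
    key an attacker cannot recompute tokens from guessed values.
    """
    return hmac.new(PSEUDONYM_KEY, value.strip().lower().encode(), hashlib.sha256).hexdigest()


def protect(record: dict, pii_fields: set[str]) -> dict:
    """Pseudonymize the listed PII fields before the record is loaded."""
    return {k: pseudonymize(v) if k in pii_fields else v for k, v in record.items()}


row = protect({"email": "Ana@Example.com", "plan": "pro"}, {"email"})
print(row["plan"], len(row["email"]))  # pro 64
```

Because the same email always yields the same token, joins and distinct counts still work on the pseudonymized column. Under GDPR, pseudonymized data generally remains personal data, so this reduces exposure rather than removing compliance obligations.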

Conclusion

The world of data is constantly evolving, and so are the tools that shape it. The ETL tools we've explored are at the forefront of this evolution, each catering to different business needs.

If data is the new oil, these ETL tools are the refineries that turn raw data into actionable intelligence.

Stay tuned to Cloudaeon for future advancements and innovations in this exciting field.